How To Deal With Collinearity

Methods for dealing with collinearity should begin with increasing the sample size, since a larger sample decreases the standard errors of the regression coefficients.
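The standard ordinary-least-squares variance formula shows both effects at once. It is a textbook result stated here as background, and the symbols are introduced for illustration rather than taken from the source. For predictor $x_j$ in a model with an intercept:

$$
\operatorname{Var}(\hat{\beta}_j) = \frac{\sigma^2}{(n-1)\,s_j^2\,(1 - R_j^2)} = \frac{\sigma^2}{(n-1)\,s_j^2}\cdot \mathrm{VIF}_j,
\qquad
\mathrm{VIF}_j = \frac{1}{1 - R_j^2}
$$

Here $n$ is the sample size, $\sigma^2$ the error variance, $s_j^2$ the sample variance of $x_j$, and $R_j^2$ the $R^2$ from regressing $x_j$ on the other predictors. The standard error is the square root of this variance. A larger $n$ shrinks it, while strong collinearity ($R_j^2 \to 1$) inflates it by the factor $\mathrm{VIF}_j$; more data offsets that inflation but does not remove it.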

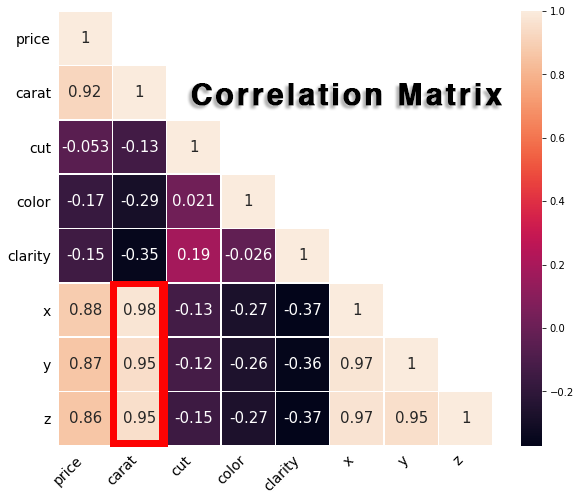

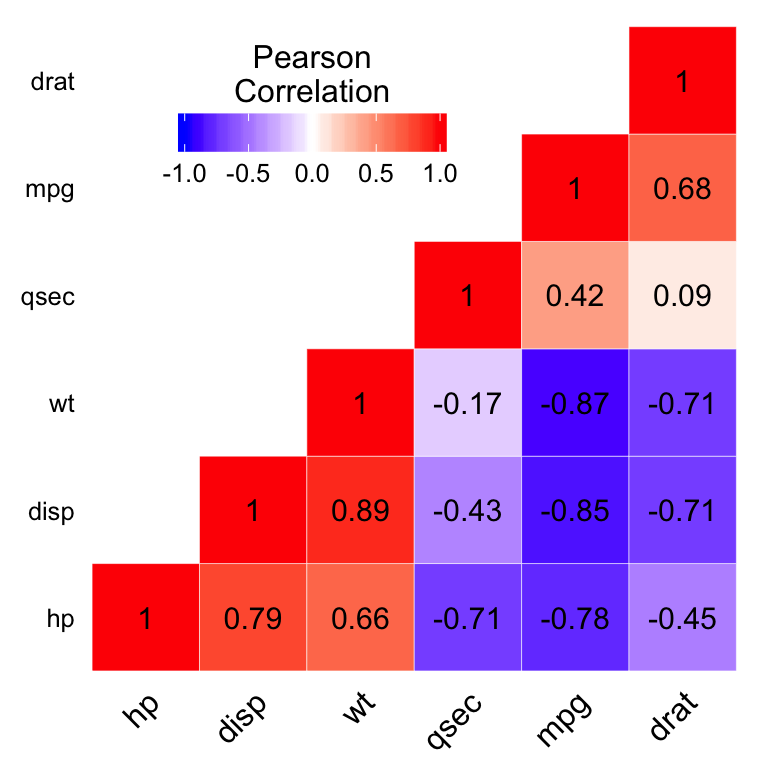

Multicollinearity is a condition where a predictor variable is highly correlated with another predictor. You usually cannot control how the predictors relate to each other, but what you do have a choice over is which variables to omit: remove some of the highly correlated independent variables.
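A minimal sketch of removing some of the highly correlated independent variables, assuming the predictors are held in a plain dict of equal-length lists. The helper names `pearson` and `drop_correlated`, the 0.9 cutoff, and the toy data are all hypothetical. The rule of keeping whichever column comes first is arbitrary; in practice, which variable to omit should be decided with domain knowledge.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def drop_correlated(columns, threshold=0.9):
    """Greedily drop the later column of every pair whose |r| exceeds threshold.

    The 0.9 default is a hypothetical cutoff, not a value from the source.
    """
    dropped = set()
    for a, b in combinations(list(columns), 2):
        if a in dropped or b in dropped:
            continue  # one member of this pair has already been removed
        if abs(pearson(columns[a], columns[b])) > threshold:
            dropped.add(b)  # keep the first column, omit the second
    return {name: vals for name, vals in columns.items() if name not in dropped}


# Made-up toy data: x3 is almost a copy of x1; x2 is only moderately related.
data = {
    "x1": [1, 2, 3, 4, 5, 6, 7, 8],
    "x2": [3, 1, 4, 1, 5, 9, 2, 6],
    "x3": [1.1, 1.9, 3.1, 3.9, 5.1, 5.9, 7.1, 7.9],
}
kept = drop_correlated(data)
print(list(kept))  # ['x1', 'x2']
```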

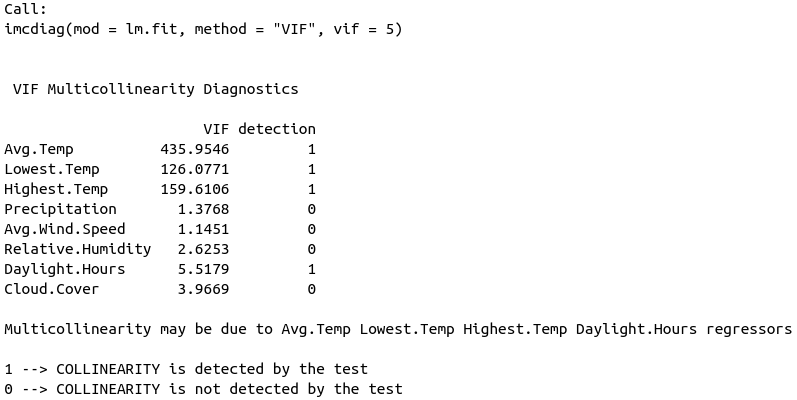

For example, `total_pymnt` was dropped because its VIF was the highest: `x.drop(['total_pymnt'], …)`. Multicollinearity occurs because two (or more) variables are related. If you would like to carry out variable selection in the presence of high collinearity, I can recommend the l0ara package, which fits L0-penalized GLMs using an iterative adaptive ridge procedure.
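A dependency-free sketch of computing VIFs and dropping the column with the highest one, in the spirit of the `total_pymnt` example. It relies on the standard identity that each VIF, $1/(1 - R_j^2)$, equals a diagonal entry of the inverse of the predictors' correlation matrix. The helpers `pearson`, `invert`, and `vif` and the toy data are hypothetical; with pandas and statsmodels you would more likely call `variance_inflation_factor(exog, exog_idx)` from `statsmodels.stats.outliers_influence` on a design matrix that includes a constant column.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def invert(m):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("singular matrix: predictors are perfectly collinear")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [rv - f * cv for rv, cv in zip(a[r], a[col])]
    return [row[n:] for row in a]


def vif(columns):
    """VIF of each column, read off the diagonal of the inverse correlation matrix."""
    names = list(columns)
    corr = [[pearson(columns[a], columns[b]) for b in names] for a in names]
    inv = invert(corr)
    return {name: inv[i][i] for i, name in enumerate(names)}


data = {
    "x1": [1, 2, 3, 4, 5, 6, 7, 8],
    "x2": [3, 1, 4, 1, 5, 9, 2, 6],
    "x3": [1.1, 1.9, 3.1, 3.9, 5.1, 5.9, 7.1, 7.9],
}
vifs = vif(data)
worst = max(vifs, key=vifs.get)  # plays the role of total_pymnt
reduced = {k: v for k, v in data.items() if k != worst}  # like x.drop([worst], axis=1)
print(worst, {k: round(v, 1) for k, v in vifs.items()})
```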

To remove collinearity, we can exclude independent variables that have a high VIF. This is the most straightforward solution, and domain knowledge is often extremely helpful in deciding which variable to drop. Another option is to linearly combine the correlated independent variables, for example by adding them together into a single predictor.
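A small sketch of linearly combining correlated independent variables by adding them together, using the same hypothetical dict-of-lists layout. `combine_columns` and the column names are illustrative only. Summing raw values makes sense when the variables share a unit; if they don't, standardizing them first is a common choice (an assumption, not something the source specifies).

```python
def combine_columns(columns, group, new_name):
    """Replace the columns named in `group` with one column holding their row-wise sum."""
    combined = [sum(row) for row in zip(*(columns[name] for name in group))]
    result = {name: vals for name, vals in columns.items() if name not in group}
    result[new_name] = combined
    return result


# Hypothetical example: two payment columns measured in the same currency.
data = {
    "principal_paid": [100, 200, 300],
    "interest_paid": [10, 25, 40],
    "term_months": [36, 60, 36],
}
merged = combine_columns(data, ["principal_paid", "interest_paid"], "total_paid")
print(merged)  # {'term_months': [36, 60, 36], 'total_paid': [110, 225, 340]}
```

After combining, it is worth re-running whatever collinearity check you use, since the new column can still be correlated with the remaining predictors.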

Correction of collinearity is more difficult than diagnosis. The best solution for dealing with multicollinearity is to understand its cause and remove it. Potential solutions include increasing the sample size, dropping one or more of the highly correlated variables, and linearly combining correlated variables into one.

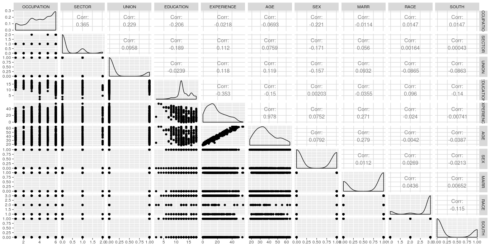

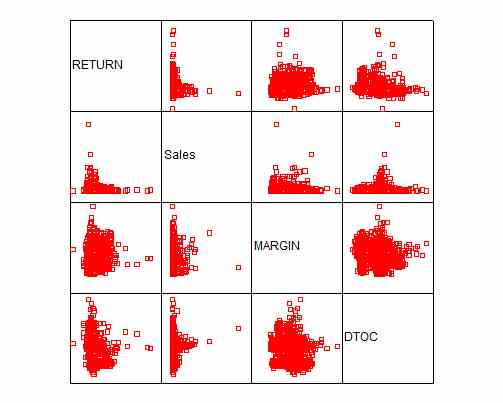

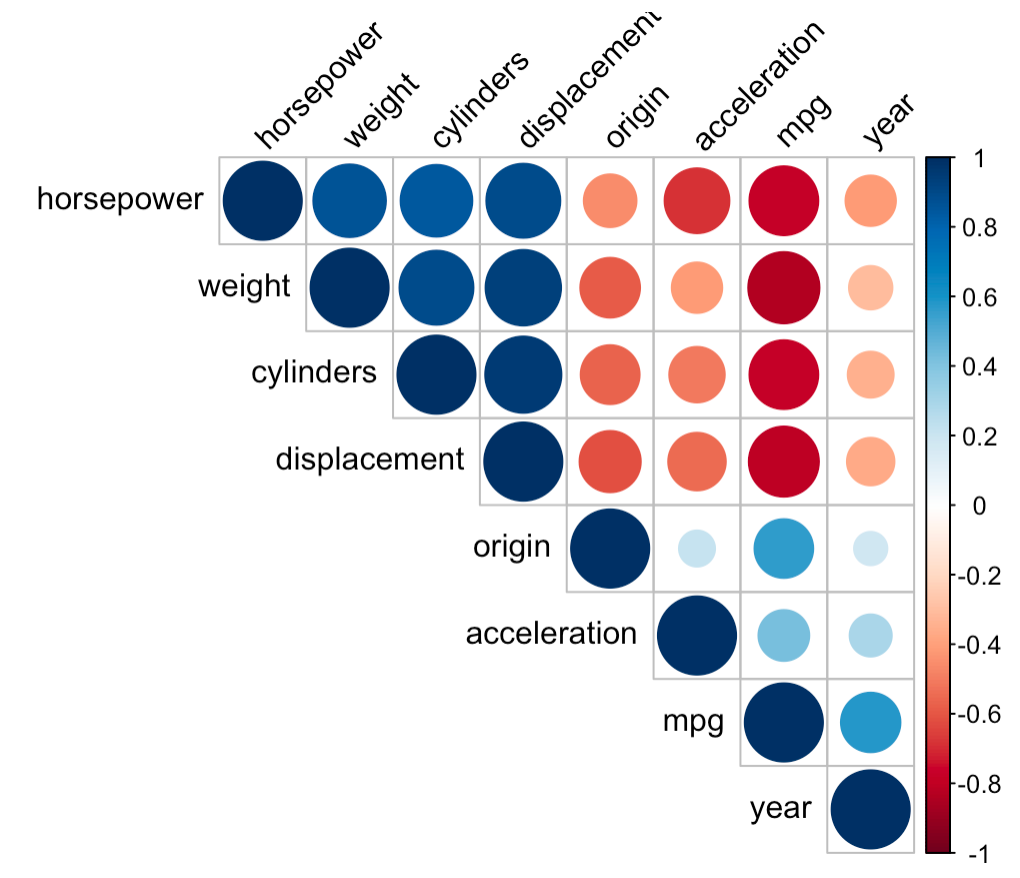

Collinearity (also known as multicollinearity) is a very relevant issue in multiple linear regression. To detect multicollinearity in your data, start by computing a correlation matrix between your predictors and checking for extreme correlations (for example, absolute values above 0.55). Removing multicollinearity is an essential step before we can interpret the coefficients of an ML model.
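A minimal sketch of the correlation-matrix check, flagging pairs whose absolute correlation exceeds the 0.55 level mentioned above. The helper names and toy data are hypothetical; with pandas, `DataFrame.corr()` produces the same Pearson matrix.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_matrix(columns):
    """Return the column names and the full Pearson correlation matrix."""
    names = list(columns)
    return names, [[pearson(columns[a], columns[b]) for b in names] for a in names]


def extreme_pairs(columns, threshold=0.55):
    """List predictor pairs whose absolute correlation exceeds `threshold`."""
    names, matrix = correlation_matrix(columns)
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):  # upper triangle only; the matrix is symmetric
            if abs(matrix[i][j]) > threshold:
                pairs.append((names[i], names[j], matrix[i][j]))
    return pairs


data = {
    "x1": [1, 2, 3, 4, 5, 6, 7, 8],
    "x2": [3, 1, 4, 1, 5, 9, 2, 6],
    "x3": [1.1, 1.9, 3.1, 3.9, 5.1, 5.9, 7.1, 7.9],
}
for a, b, r in extreme_pairs(data):
    print(f"{a} vs {b}: r = {r:.3f}")  # x1 vs x3: r = 0.999
```

Pairwise correlations can miss collinearity that involves three or more variables at once, which is why the VIF is often checked as well.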

To reduce multicollinearity, let’s remove the column with the highest VIF and check the results.
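A sketch of repeating that step: drop the highest-VIF column, recompute, and stop once every VIF is at or below a cutoff. The threshold of 10 is a commonly quoted rule of thumb (5 is also used) and is an assumption here, as are all helper names and the toy data.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def invert(m):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("singular matrix: predictors are perfectly collinear")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [rv - f * cv for rv, cv in zip(a[r], a[col])]
    return [row[n:] for row in a]


def vif(columns):
    """VIF of each column, read off the diagonal of the inverse correlation matrix."""
    names = list(columns)
    corr = [[pearson(columns[a], columns[b]) for b in names] for a in names]
    inv = invert(corr)
    return {name: inv[i][i] for i, name in enumerate(names)}


def eliminate_by_vif(columns, threshold=10.0):
    """Drop the highest-VIF column until every remaining VIF is <= threshold."""
    remaining = dict(columns)
    removed = []
    while len(remaining) > 1:
        vifs = vif(remaining)  # recompute after every removal ("check the results")
        worst = max(vifs, key=vifs.get)
        if vifs[worst] <= threshold:
            break  # nothing left above the cutoff
        removed.append(worst)
        del remaining[worst]
    return remaining, removed


data = {
    "x1": [1, 2, 3, 4, 5, 6, 7, 8],
    "x2": [3, 1, 4, 1, 5, 9, 2, 6],
    "x3": [1.1, 1.9, 3.1, 3.9, 5.1, 5.9, 7.1, 7.9],
}
remaining, removed = eliminate_by_vif(data)
print("removed:", removed, "kept:", list(remaining))
```

Recomputing after each removal matters because dropping one column changes the VIFs of all the others; removing several high-VIF columns in one pass can discard more variables than necessary.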